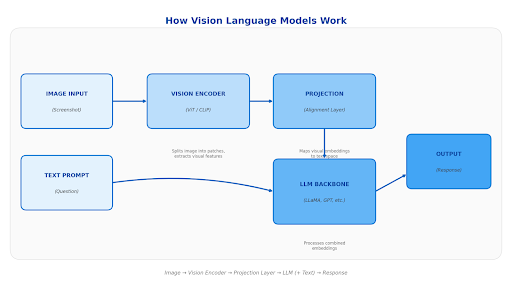

Vision language models (VLMs) are multimodal AI systems that combine computer vision and natural language understanding, allowing machines to see and reason about visual interfaces the way humans do.

68% of engineering teams say test maintenance is their biggest QA bottleneck. Not writing tests. Not finding bugs. Just keeping existing tests from breaking. This isn't a tooling problem. It's a fundamental limitation of how traditional test automation sees the world.

According to industry research, organizations report maintenance challenges as the primary bottleneck in mobile test automation scalability. The culprit? Locator-based testing is a two-decade-old approach that treats your app like a collection of XML nodes rather than what it actually is: a visual interface designed for human eyes.

This is where vision language models come in. Emerging from the same AI shift that gave us ChatGPT, they move beyond text-only intelligence and are poised to transform how we test mobile applications.

Overview

- The global AI market is projected to grow from $391 billion in 2025 to nearly five times that by 2030, with VLMs driving much of this expansion.

- Vision Language Models combine computer vision with natural language processing, enabling AI to understand screens the way humans do.

- Traditional locator-based testing breaks when UIs change; VLM-based testing adapts automatically.

- Enterprises deploying VLM-powered automation report up to 60% reduction in manual workflow time.

- Early adopters are achieving 10× faster testing cycles and 97% test accuracy.

The Evolution: From LLMs to VLMs

Large Language Models like GPT-4 and Claude demonstrated that AI could understand context and reason through complex problems. But they shared a fundamental limitation: they were blind.

Vision language models (VLMs) remove that constraint by combining language understanding with computer vision. A vision encoder processes screenshots into numerical representations, which are then aligned with a language model's embedding space. The result is AI that can see app screens, understand visual context, and reason about UI state, much like a human tester.

This shift matters because software is visual. Interfaces change, layouts move, and meaning is often conveyed through placement, color, and hierarchy, not text alone. VLMs are designed for that reality.

The global vision language model market is now estimated to surpass $50 billion, with annual growth above 40%. The takeaway is simple: AI systems that can’t see are increasingly incomplete.

How VLMs Work

Modern vision language models (VLMs) follow three primary architectural approaches, each balancing performance, efficiency, and deployment needs.

- Fully Integrated (GPT-4V, Gemini): Process images and text through unified transformer layers. This approach delivers the strongest multimodal reasoning and contextual understanding, but comes with the highest computational cost.

- Visual Adapters (LLaVA, BLIP-2): Connect pre-trained vision encoders to LLMs via projection layers. They strike a practical balance between performance and efficiency, making them popular for research and production use.

- Parameter-Efficient (Phi-4 Multimodal): Designed for speed and efficiency, these models achieve roughly 85–90% of the accuracy of larger VLMs while enabling sub-100ms inference, making them suitable for edge and real-time deployments.
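To make the visual-adapter idea more concrete, here is a minimal sketch in plain Python of a linear projection that maps vision-encoder patch features into a language model's embedding dimension. The sizes, the random weights, and the function names are illustrative assumptions. Real adapters are trained and may be considerably more elaborate than a single linear layer.

```python
import random

def make_projection(vision_dim, text_dim, seed=0):
    """Create a random (untrained) weight matrix of shape vision_dim x text_dim."""
    rng = random.Random(seed)
    return [[rng.uniform(-0.1, 0.1) for _ in range(text_dim)]
            for _ in range(vision_dim)]

def project_patches(patch_features, weights):
    """Linearly map each patch feature vector into the text embedding space."""
    text_dim = len(weights[0])
    return [
        [sum(patch[i] * weights[i][j] for i in range(len(patch)))
         for j in range(text_dim)]
        for patch in patch_features
    ]

# Toy sizes (assumed): 4 screenshot patches with 8-dim features,
# projected into a 16-dim text embedding space.
rng = random.Random(1)
patches = [[rng.random() for _ in range(8)] for _ in range(4)]
weights = make_projection(vision_dim=8, text_dim=16)
visual_tokens = project_patches(patches, weights)

# Placeholder embeddings standing in for a 3-token text prompt.
text_tokens = [[0.0] * 16 for _ in range(3)]

# The language model would receive visual and text tokens as one sequence.
llm_input = visual_tokens + text_tokens
print(len(llm_input), len(llm_input[0]))
```

The point of the sketch is the shape change: once projected, image patches look like ordinary tokens to the language model, which is why a pre-trained LLM can be reused largely unchanged.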

Beyond architecture, VLMs are trained using a combination of techniques:

- Contrastive learning, which aligns images and text into a shared embedding space

- Image captioning, where models learn to generate descriptions from visual inputs

- Instruction tuning, enabling models to follow natural-language commands grounded in visual context

CLIP’s training on over 400 million image-text pairs laid the foundation for modern zero-shot visual recognition and remains central to how many VLMs learn to generalise across tasks.
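As a rough illustration of contrastive learning, the sketch below computes a symmetric contrastive loss over a small batch of image and text embeddings in plain Python. The function names, the toy embeddings, and the temperature value are assumptions chosen for illustration; this is not a reproduction of any specific model's training code.

```python
import math

def normalize(v):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def cross_entropy_diag(logits):
    """Mean cross-entropy where the correct class for row i is column i."""
    total = 0.0
    for i, row in enumerate(logits):
        m = max(row)  # subtract the max for numerical stability
        log_sum = m + math.log(sum(math.exp(x - m) for x in row))
        total += log_sum - row[i]
    return total / len(logits)

def contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric loss: matching image/text pairs should score higher than mismatches."""
    imgs = [normalize(v) for v in image_embs]
    txts = [normalize(v) for v in text_embs]
    # logits[i][j] = similarity between image i and text j, scaled by temperature
    logits = [[sum(a * b for a, b in zip(img, txt)) / temperature for txt in txts]
              for img in imgs]
    logits_t = [list(col) for col in zip(*logits)]  # text-to-image direction
    return 0.5 * (cross_entropy_diag(logits) + cross_entropy_diag(logits_t))

# Toy batch: pair i of images matches pair i of texts.
images = [[1.0, 0.0], [0.0, 1.0]]
aligned_texts = [[1.0, 0.0], [0.0, 1.0]]
shuffled_texts = [[0.0, 1.0], [1.0, 0.0]]

aligned = contrastive_loss(images, aligned_texts)
shuffled = contrastive_loss(images, shuffled_texts)
print(aligned < shuffled)
```

Training pushes the loss down, which pulls each screenshot's embedding toward its own description and away from the others in the batch.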

VLM Landscape

Open-source models now perform within 5-10% of proprietary alternatives while offering fine-tuning flexibility and eliminating per-call API costs.

Key Benchmarks

For mobile testing, the critical capabilities are UI element recognition, OCR accuracy, spatial reasoning, and visual anomaly detection.

Why Traditional Mobile Testing Breaks

Traditional mobile test automation was built for static interfaces. Modern mobile apps are anything but.

The Locator Problem

Every mobile test automation framework depends on locators to identify UI elements. This creates cascading problems:

- Fragility: A developer refactors a screen, and tests break even when the app works perfectly.

- Maintenance burden: Teams spend more time fixing tests than writing new ones.

- Platform inconsistency: Android and iOS handle UI hierarchies differently, doubling maintenance work.

The Flaky Test Epidemic

Flaky mobile tests pass sometimes and fail other times, eroding trust in automation and wasting engineering time. Timing issues, race conditions, and dynamic elements cause unpredictable failures.

Research shows self-healing approaches can reduce flaky tests by up to 60%. VLM-based testing goes further by understanding visual state rather than relying on element presence.

The Coverage Gap

Traditional automation is good at catching crashes and functional errors. It consistently misses visual bugs.

Layout shifts, alignment issues, missing UI elements, and subtle regressions often slip through to production, where users notice them immediately. These are visual failures, not logical ones, and locator-based tests aren’t built to see them.

How Vision Language Models Transform Testing

Vision language models change mobile testing by shifting automation from element-based assumptions to visual understanding. Instead of interacting with UI through locators, VLM-powered testing agents reason about screens the way humans do, based on appearance, context, and layout.

Understanding Screens Like Humans

A VLM-powered testing agent receives a screenshot and interprets it holistically. It recognizes buttons, text fields, and navigation elements based on visual appearance and spatial context, not XML attributes.

When you instruct the agent to "tap the login button," it locates the button visually. If the button moves or gets a new ID, the test still works because the AI adapts to what it sees, not what it expects.
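A minimal sketch of that idea, assuming a VLM has already turned a screenshot into a list of detected elements with labels and bounding boxes. The `DetectedElement` structure and both helper functions are hypothetical stand-ins, not any platform's API, and a real agent would resolve the instruction with the model itself rather than by exact label match.

```python
from dataclasses import dataclass

@dataclass
class DetectedElement:
    label: str   # visible text the model reports for the element
    kind: str    # e.g. "button", "text_field"
    box: tuple   # (left, top, right, bottom) in screen pixels

def find_target(elements, label, kind):
    """Pick the first detected element whose kind and visible label match."""
    for el in elements:
        if el.kind == kind and el.label.lower() == label.lower():
            return el
    return None

def tap_point(el):
    """Return the centre of the element's bounding box as tap coordinates."""
    left, top, right, bottom = el.box
    return ((left + right) // 2, (top + bottom) // 2)

# Hypothetical detections for the same login screen before and after a redesign.
before = [
    DetectedElement("Email", "text_field", (100, 900, 980, 1000)),
    DetectedElement("Login", "button", (100, 1200, 980, 1300)),
]
after = [
    DetectedElement("Login", "button", (100, 400, 980, 500)),
    DetectedElement("Email", "text_field", (100, 600, 980, 700)),
]

tap_before = tap_point(find_target(before, "login", "button"))
tap_after = tap_point(find_target(after, "login", "button"))
print(tap_before, tap_after)
```

Nothing in the lookup refers to an element ID or a position in a hierarchy, so moving the button only changes the coordinates that come back.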

Research on VLM-based Android testing shows:

- 9% higher code coverage compared to traditional methods

- Detection of bugs that would otherwise reach production

This visual-first approach removes entire classes of brittle failures.

Natural Language Test Instructions

With vision language models, test creation shifts from writing code to describing intent.

"Tap on Instamart"

"Tap on Beverage Corner"

"Add the first product to cart"

"Validate that the cart price matches the product price"

The VLM interprets these instructions, identifies UI elements visually, and executes actions accordingly. This lets anyone on your team contribute to test coverage without any deep automation expertise.
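The sketch below shows one way such a test could be represented and run: a list of plain-English steps handed one at a time to an agent. The `run_test` runner and the `fake_agent` stub are hypothetical and exist only to show the shape of the loop. A real agent would capture a screenshot, consult a VLM, and act on the device.

```python
def run_test(steps, agent):
    """Run steps in order; stop at the first failure and report what happened."""
    results = []
    for step in steps:
        ok = agent(step)
        results.append((step, ok))
        if not ok:
            break  # later steps depend on earlier ones, so stop here
    return results

def fake_agent(instruction):
    """Stand-in for a VLM agent: succeeds unless the step mentions a missing item."""
    return "missing" not in instruction.lower()

cart_test = [
    "Tap on Instamart",
    "Tap on Beverage Corner",
    "Add the first product to cart",
    "Validate that the cart price matches the product price",
]

results = run_test(cart_test, fake_agent)
for step, ok in results:
    print("PASS" if ok else "FAIL", "-", step)
```

Because the test is just data, it can be reviewed by anyone who can read the steps, and the agent implementation can change without touching the test.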

Handling Dynamic UIs

Modern mobile apps are dynamic by design. Popups, A/B tests, personalised content and asynchronous loading are the norm.

VLM-based testing handles all of it gracefully. Because the model reasons about current visual state, it adapts to UI variations instead of failing when the structure changes. Tests remain stable even as the interface evolves.
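One way to picture this is an observe-then-act loop that re-reads the screen before every action. The sketch below simulates it with a fake app; `FakeApp`, its state dictionary, and `execute_step` are hypothetical names used for illustration, with `observe` standing in for a screenshot plus VLM interpretation.

```python
class FakeApp:
    """Simulated app that opens with a promotional popup covering the screen."""

    def __init__(self):
        self.popup_open = True
        self.actions = []

    def observe(self):
        # Stand-in for taking a screenshot and asking a VLM what is visible.
        return {"popup": self.popup_open, "targets": ["Login"]}

    def act(self, action):
        self.actions.append(action)
        if action == "dismiss popup":
            self.popup_open = False

def execute_step(app, target, max_attempts=3):
    """Re-check the current screen on each attempt instead of assuming a fixed state."""
    for _ in range(max_attempts):
        state = app.observe()
        if state["popup"]:
            app.act("dismiss popup")  # clear the obstruction, then look again
            continue
        if target in state["targets"]:
            app.act(f"tap {target}")
            return True
    return False

app = FakeApp()
succeeded = execute_step(app, "Login")
print(succeeded, app.actions)
```

The test step never mentions the popup. The loop deals with it because it acts on what is currently on screen rather than on a scripted sequence.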

Catching What Traditional Automation Misses

VLMs detect bugs that traditional automation misses entirely. Research shows VLM-based systems identified 29 new bugs in Google Play apps that existing techniques failed to catch, 19 of which were confirmed and fixed by developers. These are the kinds of issues users notice immediately, but locator-based tests rarely catch.

Getting Started with VLM-Powered Testing

Adopting vision language models doesn’t require reworking your entire automation strategy. Teams typically start small, prove stability, and expand coverage from there.

Start with Critical Journeys

Identify 20-30 critical test cases covering your most important user flows. These are often the tests that break most often and create the most CI noise.

Vision AI platforms can get these running in your CI/CD pipeline within a day, giving teams early confidence without a long setup cycle.

Write Tests in Plain English

With VLM-based testing, test creation shifts from code to intent. Instead of writing locator-driven scripts like:

driver.findElement(By.id("login_button")).click()

describe the action naturally:

"Tap on the Login button."

Vision language models interpret these instructions, identify UI elements visually, and execute the steps. This makes tests easier to write, easier to review, and easier to maintain over time.

Integrate with Existing CI/CD

VLM-powered mobile testing fits into existing pipelines without friction. Most platforms integrate with tools like GitHub Actions, Jenkins, CircleCI, and other CI systems.

Upload your APK or app build, configure your tests, and trigger execution on every build. Because tests rely on visual understanding rather than brittle locators, failures are more meaningful and easier to diagnose.
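As a rough sketch of the last step in that flow, the script below reads a test report and decides whether the build should fail. The JSON report format, the field names, and both functions are assumptions made for illustration. Each platform defines its own report schema and CI integration.

```python
import json

# Hypothetical report a test platform might return for one build.
SAMPLE_RESULTS = """
[
  {"name": "login flow", "status": "passed"},
  {"name": "add to cart", "status": "failed"},
  {"name": "checkout", "status": "passed"}
]
"""

def summarize(results_json):
    """Count the tests and collect the names of any that did not pass."""
    results = json.loads(results_json)
    failed = [r["name"] for r in results if r["status"] != "passed"]
    return {"total": len(results), "failed": failed}

def exit_code(summary):
    """Return a non-zero code when any test failed, so the CI job is marked failed."""
    return 1 if summary["failed"] else 0

summary = summarize(SAMPLE_RESULTS)
print(f"{summary['total']} tests, {len(summary['failed'])} failed: {summary['failed']}")
# In a real pipeline step this value would be passed to sys.exit().
print("exit code:", exit_code(summary))
```

CI systems such as GitHub Actions and Jenkins treat a non-zero exit code from a step as a failure, so a small gate like this is usually all that is needed to block a bad build.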

Metrics That Matter

Why Vision AI Beats Other AI Testing Approaches

Not all AI testing is created equal. Many platforms claim "AI-powered" testing but rely on natural language processing of element trees or self-healing locators that still break.

Vision AI takes a fundamentally different approach.

NLP-Based Automation Tools

NLP-based automation tools still parse the DOM and use AI to generate or fix locator-based scripts. When the underlying structure changes dramatically, they struggle.

Self-Healing Locator Frameworks

Self-healing locators improve on traditional automation by automatically fixing broken selectors. This helps with minor changes, such as renamed IDs or small layout shifts.
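A simplified sketch of how a self-healing lookup might work: try the primary selector, then fall back to alternates recorded from an earlier run. The element-tree format and `find_with_healing` are hypothetical, and real frameworks use richer matching, but the limitation is the same in kind.

```python
def find_with_healing(tree, selectors):
    """Try each (attribute, value) selector in order; return the match and selector used."""
    for attr, value in selectors:
        for node in tree:
            if node.get(attr) == value:
                return node, (attr, value)
    return None, None

# Selectors recorded when the test was written: primary ID, then visible text.
selectors = [("id", "login_button"), ("text", "Login")]

# Minor change: the ID was renamed but the visible text is unchanged.
renamed_id = [{"id": "btn_sign_in", "text": "Login"}]
# Larger change: both the ID and the visible text are different.
redesigned = [{"id": "cta_primary", "text": "Sign in"}]

node, used = find_with_healing(renamed_id, selectors)
print(used)

node2, used2 = find_with_healing(redesigned, selectors)
print(used2)
```

The fallback rescues the test after the rename, but once every recorded attribute has changed there is nothing left to heal from, even though a person would still recognize the button at a glance.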

Vision AI-Based Testing

Vision AI understands the screen as a human does: by recognizing buttons, forms, and content by appearance and context, not code structure. Because tests are grounded in what is visible, not how elements are implemented, this approach eliminates locator dependency altogether. Tests remain stable even as UI structure evolves.

The difference shows in the numbers. While other platforms report 60-85% reductions in maintenance time, Vision AI achieves near-zero maintenance because tests never relied on brittle selectors in the first place.

Drizz: Vision AI-Powered Mobile Testing

Drizz brings Vision AI testing to teams who need reliability at speed. Our agent understands screens the way humans do.

- Plug & Play: Upload your APK, launch tests in seconds. Zero locator configuration.

- Plain English Tests: Describe tests naturally. Drizz’s Vision AI interprets intent and executes actions visually.

- Tests That Don't Break: Dynamic UIs, popups, and A/B variants are handled automatically as the interface changes.

- Precision Debugging: See exactly what happened during execution with screenshots and detailed logs.

Drizz guarantees getting your 20 most critical mobile test cases running in CI/CD within a day, so teams can validate reliability early and scale with confidence.

Conclusion

Vision language models address the brittleness, maintenance burden, and coverage gaps that have limited mobile test automation for years. By grounding tests in visual understanding rather than brittle locators, VLM-based testing delivers higher stability, broader coverage, and far lower maintenance over time.

The technology is mature, the results are measurable, and early adopters are already seeing a clear advantage in how reliably they test mobile applications.

Ready to see Vision AI-powered mobile testing in action? Schedule a demo and get your critical tests running within a day.